[Python] Machine Learning Intro #1 - Hello World

The Machine Learning Intro series covers the very basics of what Machine Learning actually is and how to implement it using Python.

What is SciKit-Learn?

SciKit-Learn is a Python module for Machine Learning. It provides simple and efficient tools for data mining and data analysis, including various classification, regression and clustering algorithms. In short, it makes our lives a lot easier when dealing with Machine Learning, because we don't have to write all those algorithms by hand.

And that's why we will use it here.

What is Machine Learning?

You can think of it as a subfield of Artificial Intelligence (AI). The algorithm learns from experience and examples, much like a human does. With Machine Learning, you can solve problems that would be impractical to solve with regular hard-coded rules.

For example, suppose you have to write a program that takes images of apples and oranges as input and determines whether each image shows an apple or an orange. That would be nearly impossible to achieve without Machine Learning.

You would have to write a ton of rules for just one particular case. You could compare the number of orange pixels in the image to the number of red ones, but what if it is a grayscale image? Or what if there are no fruits in the image at all? Another problem arises if you want to add a new kind of fruit to the list of fruits you want to compare. You would have to rewrite and redefine all the rules just for that one new case.
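To make the problem concrete, here is a minimal sketch of such a hand-written rule. Everything in it is an assumption made for illustration: an "image" is just a list of (red, green, blue) tuples, and the color thresholds and function names are invented, not taken from any library.

```python
# Hypothetical hand-written rule: classify a fruit image by counting pixels.
# An "image" is a plain list of (red, green, blue) tuples (an assumption
# that keeps the sketch free of image libraries).

def is_orange_pixel(pixel):
    r, g, b = pixel
    # Invented thresholds: strong red, medium green, little blue.
    return r > 200 and 100 <= g <= 180 and b < 80

def is_red_pixel(pixel):
    r, g, b = pixel
    # Invented thresholds: fairly strong red, little green and blue.
    return r > 150 and g < 80 and b < 80

def classify_by_color(pixels):
    orange = sum(is_orange_pixel(p) for p in pixels)
    red = sum(is_red_pixel(p) for p in pixels)
    if orange == 0 and red == 0:
        # Grayscale image or no fruit at all: the rule has nothing to go on.
        return "unknown"
    return "orange" if orange > red else "apple"

orange_image = [(255, 140, 0)] * 10
apple_image = [(200, 30, 40)] * 10
gray_image = [(128, 128, 128)] * 10

print(classify_by_color(orange_image))  # orange
print(classify_by_color(apple_image))   # apple
print(classify_by_color(gray_image))    # unknown
```

Even this tiny rule already needs a special case for grayscale images, and supporting a third fruit would mean another set of thresholds and another branch.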

This is where Machine Learning comes into play. We let the algorithm figure out the rules for us, so we don't have to write them by hand.

For that, we will train a Classifier.

You can think of it as a function that takes some data as input, and assigns labels to it as output.

For example, you have a picture and you want to classify it as an apple or orange, or you have an e-mail and you want to classify it as spam or not spam.

The technique for creating the classifier automatically is called Supervised Learning. It creates a classifier by finding patterns in examples.

Supervised Learning

Supervised Learning is split into three steps:

collect the training data, train the classifier with that data as input, and let the trained classifier make its predictions as output.

Collect Training Data

Step one is to collect the training data. These are examples of the problem we want to solve. In our case, we want a function that classifies a fruit. It will take a description of the fruit as input and then predict, based on its features, whether it is an apple or an orange. A feature can be anything that describes the object you want to classify. In our case, the features are the texture of the fruit and its weight.

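For this example, we will simplify the training data for demonstration purposes and write down some measurements of oranges and apples:

Weight | Texture | Label
120 | smooth | apple
130 | smooth | apple
165 | bumpy | orange
170 | bumpy | orange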

In Machine Learning, these measurements are called Features. In our simplified example we have just two: weight and texture. We weighed some oranges and apples and wrote the values down. We also know that the apple's texture is smooth and the orange's texture is bumpy.

Good features make it easier to discriminate between different types of fruits. Each row of our table is an example and describes one piece of fruit.

The last column is called a Label. It identifies what type of fruit is in each row. In our example there are just two: apples and oranges.

The whole table is our training data and is used to train our Classifier. As a rule of thumb, the more training data you have, the better the Classifier will be.

Ok, so let's write some code.

First, we have to import the SciKit-Learn module:

import sklearn

Now, we will bring our table, representing the training data, to code:

features = [[120, "smooth"], [130, "smooth"], [165, "bumpy"], [170, "bumpy"]]
labels = ["apple", "apple", "orange", "orange"]

"features" contains the first two columns, weight and texture, and "labels" contains the last column, which determines the type of fruit.

Features are the input of the classifier and labels are the desired output.

Seems pretty easy, right?

Because SciKit-Learn uses real-valued features, we have to change the above code a bit to make it valid:

features = [[120, 1], [130, 1], [165, 0], [170, 0]]

For "bumpy" we will use 0, and for "smooth" we will use 1.

The same applies to labels, so let's change it as well:

labels = [0, 0, 1, 1]

For "apple" we will use 0 and for "orange" we will use 1.
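If you would rather keep the readable version of the table and derive the numbers from it, the same encoding can be sketched with two lookup dictionaries. The names raw_rows, texture_codes and label_codes are made up for this illustration; they are not part of SciKit-Learn.

```python
# Readable version of the table: (weight, texture, fruit name).
raw_rows = [
    (120, "smooth", "apple"),
    (130, "smooth", "apple"),
    (165, "bumpy", "orange"),
    (170, "bumpy", "orange"),
]

# Same encoding as in the text: bumpy = 0, smooth = 1, apple = 0, orange = 1.
texture_codes = {"bumpy": 0, "smooth": 1}
label_codes = {"apple": 0, "orange": 1}

# Translate every row into numbers the classifier can work with.
features = [[weight, texture_codes[texture]] for weight, texture, _ in raw_rows]
labels = [label_codes[fruit] for _, _, fruit in raw_rows]

print(features)  # [[120, 1], [130, 1], [165, 0], [170, 0]]
print(labels)    # [0, 0, 1, 1]
```

Keeping the mapping in one place also makes it easy to translate a prediction back into a fruit name later.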

Train the Classifier

Step two of Supervised Learning is to train the Classifier.

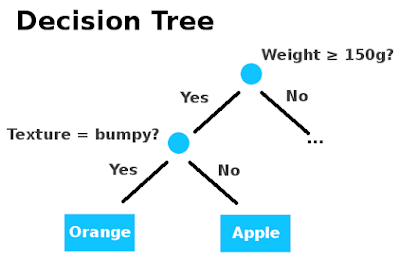

The type of Classifier we will use here is a so-called Decision Tree.

I will go more in depth on how this works in future posts of this series. For now, it's enough to think of a Classifier as a box of rules.

There are many different types of Classifiers, but the input and output types are always the same.
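As a small preview of what "same input and output" means in practice, the sketch below trains two different SciKit-Learn classifiers on the same numeric table. The fit and predict calls are explained in the steps that follow; the choice of KNeighborsClassifier as the second classifier, and n_neighbors=1, are assumptions made for this illustration only.

```python
from sklearn import tree
from sklearn.neighbors import KNeighborsClassifier

# Same numeric training data as above: [weight, texture] and fruit labels.
features = [[120, 1], [130, 1], [165, 0], [170, 0]]
labels = [0, 0, 1, 1]

# Two different kinds of classifier, used through the same interface.
classifiers = {
    "decision tree": tree.DecisionTreeClassifier(),
    # n_neighbors=1 because our table only has four rows.
    "nearest neighbor": KNeighborsClassifier(n_neighbors=1),
}

predictions = {}
for name, clf in classifiers.items():
    clf.fit(features, labels)                             # train
    predictions[name] = int(clf.predict([[160, 0]])[0])   # classify one fruit
    print(name, "->", predictions[name])
```

Only the line that creates the classifier differs; the training data and the calls around it stay the same.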

First, we need to import the tree functionality from the SciKit-Learn module, so we change the import statement to:

from sklearn import tree

Then we go and create the Classifier:

clf = tree.DecisionTreeClassifier()

At this point, this is just an empty box of rules, so it doesn't know anything about apples and oranges yet.

To train it, we need a Learning-Algorithm.

When you think of a Classifier as a box of rules, then you can think of a Learning-Algorithm as the procedure that creates them.

It does that by finding patterns in your training data.

In our case, for example, it might notice that oranges tend to weigh more.

So it will create a rule that says: the heavier the fruit is, the more likely it is to be an orange.

Luckily, the training algorithm is included in the classifier object and it is called fit.

clf = clf.fit(features, labels)

You can think of fit as being a synonym for finding patterns in the training data.

We will go into more detail about this in a future post in this series.
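If you are curious what the box of rules looks like after training, SciKit-Learn can print a trained decision tree as text with tree.export_text. This is only a sketch for peeking inside; the feature names passed in are our own labels for the two columns. In this tiny table both weight and texture separate the fruits perfectly, so the tree may pick either one for its rule.

```python
from sklearn import tree

features = [[120, 1], [130, 1], [165, 0], [170, 0]]
labels = [0, 0, 1, 1]

clf = tree.DecisionTreeClassifier()
clf = clf.fit(features, labels)

# Render the learned rules as indented text, using our own column names.
rules = tree.export_text(clf, feature_names=["weight", "texture"])
print(rules)
```

The printed tree shows a threshold on one feature and the class (0 or 1) assigned on each side of it.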

Make a Prediction

At this point, we have a trained Classifier.

So let's use it to classify a new fruit:

print(clf.predict([[160, 0]]))

Let's say our new fruit has a weight of 160 grams and a bumpy texture. What do you guess the output will be?

If all worked fine, the output should be [1], aka orange, because we defined orange as 1.

If that's the case, then congratulations, you have created your first machine learning program!
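Since predict takes a list of fruits, you can classify several at once and translate the numeric results back into names. The sketch below assumes the same encoding as above; label_names and new_fruits are names invented for this example.

```python
from sklearn import tree

features = [[120, 1], [130, 1], [165, 0], [170, 0]]
labels = [0, 0, 1, 1]
label_names = {0: "apple", 1: "orange"}  # reverse of our label encoding

clf = tree.DecisionTreeClassifier()
clf = clf.fit(features, labels)

# Two new fruits: a heavy bumpy one and a light smooth one.
new_fruits = [[160, 0], [125, 1]]
predicted = clf.predict(new_fruits)

# Translate each numeric label back into a readable fruit name.
names = [label_names[int(code)] for code in predicted]
print(names)  # ['orange', 'apple']
```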

Here is the complete code in one piece:

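from sklearn import tree
# Training data: [weight, texture], where texture is 0 = bumpy, 1 = smooth
features = [[120, 1], [130, 1], [165, 0], [170, 0]]
# Labels: 0 = apple, 1 = orange
labels = [0, 0, 1, 1]
# Create the classifier and train it on the examples
clf = tree.DecisionTreeClassifier()
clf = clf.fit(features, labels)
# Classify a new fruit; prints [1] (orange)
print(clf.predict([[160, 0]]))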

And with these simple statements, you can create a new Classifier for a new problem just by changing the training data.

For example, you could write a Classifier that discriminates between different types of cars. A sports car typically has 2 seats and tends to have more horsepower than a normal car, whereas a normal car tends to have 4 or more seats and less horsepower.

That makes this approach far more reusable than writing new rules for each problem.
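A minimal sketch of the car idea could look like this. The seat and horsepower numbers are invented purely for illustration, not real measurements, and the variable names are our own; only the training data changed compared to the fruit example.

```python
from sklearn import tree

# Invented example values: [number of seats, horsepower].
car_features = [[2, 300], [2, 350], [4, 120], [5, 150]]
# Labels: 1 = sports car, 0 = normal car.
car_labels = [1, 1, 0, 0]
car_names = {0: "normal car", 1: "sports car"}

car_clf = tree.DecisionTreeClassifier()
car_clf = car_clf.fit(car_features, car_labels)

# A new car with 2 seats and 320 horsepower.
prediction = car_clf.predict([[2, 320]])
print(car_names[int(prediction[0])])  # sports car
```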

You might ask why we used a table of features instead of pictures as training data to describe our fruits.

To answer that question: you can use pictures as training data as well, and we will get into that in a future post of this series.

The approach we used here is more general, as you will see later on.

Conclusion

That wasn't difficult, right?

That's because programming with Machine Learning isn't hard, but to get it right, you need to understand a few important concepts.

We will work through all those in the future posts, so stay tuned! :)

This post was inspired by the posts of Google Developers
